Participants:

- Anand Bhatia, CMO & Head Analytics, Fino Payment Bank

- Ankit Goenka, Head – CX, Bajaj Allianz General Insurance

- Ankush Shah, Head – Marketing, Exponentia.ai

- Ashish Yadav, Head – BFSI, Exponentia.ai

- Atul Bhandari, Head – Digital Business, Tata AIA Life Insurance

- Hemant Dabke, Enterprise Head – India, Databricks

- Madhusudan Warrier, CTO, Mirae Asset Capital Markets (India)

- Pavan Patil, Head – IT Applications, Profectus Capital

- Preetam Vyas, Conglomerate Business, Databricks

- Ruchi Lamba, Sr Account Executive, Databricks

- Sankaranarayanan Raghavan, Chief Technology and Data Officer, IndiaFirst Life Insurance

- Shallu Kaushik, Head Digital, Tata Capital

- Subrata Das, Chief Innovation Officer, U GRO Capital

- Suyog Kulkarni, Head – Data Science, Sharekhan by BNP Paribas

- Vikas Dhankhar, CTO, NeoGrowth Credit

- Virendra Pal, Chief Data Science Officer, LenDenClub

- Vivek Badwaik, Director – Sales, Exponentia.ai

Manoj: Would you classify generative AI-based transformation as a continuation of the ongoing digital transformation, or as a new wave?

Shankar: Gen AI is like an animal which needs to be tamed. It’s not a new breed of a domesticated animal; it’s a wild animal which needs to be tamed. It’s a very good tool, but it will be a different transformation. It can’t be called an extension of digital transformation; it needs different thinking altogether.

Virendra: Even before the term GenAI was coined, we were using many AI algorithms, such as the Monte Carlo model. We were doing probabilistic modelling, where we could predict future market share based on the sequence of events. Now we are using this kind of algorithm in the form of neural networks to generate new voices or new text. This new algorithm will be used in a bigger way because more people understand it. It will evolve a long way because it will now have advanced use cases to support decision making.

Suyog: This is definitely a continuation of the kind of models that we have been using. Earlier models were typically statistical models, regression models, etc. Then came supervised ML requiring a lot of labelled data. Large language models (LLMs) are slightly different. From supervised learning, we have moved to self-supervised learning through transformers – I think that will be a major change, which is opening new use cases of 2 types: one for driving productivity and efficiency, and the other for automated decision making. The latter is some time away, but GenAI will bring a lot of efficiencies in productivity enhancements.

Vikas: GenAI will transform digital transformation itself. What used to be achieved through conventional software based on rules and logic is increasingly getting transformed into data-driven outcomes. I feel we will be moving from conventional software towards GenAI-based solutions.

Manoj: What are some of the use cases that you’re already implementing or planning to implement?

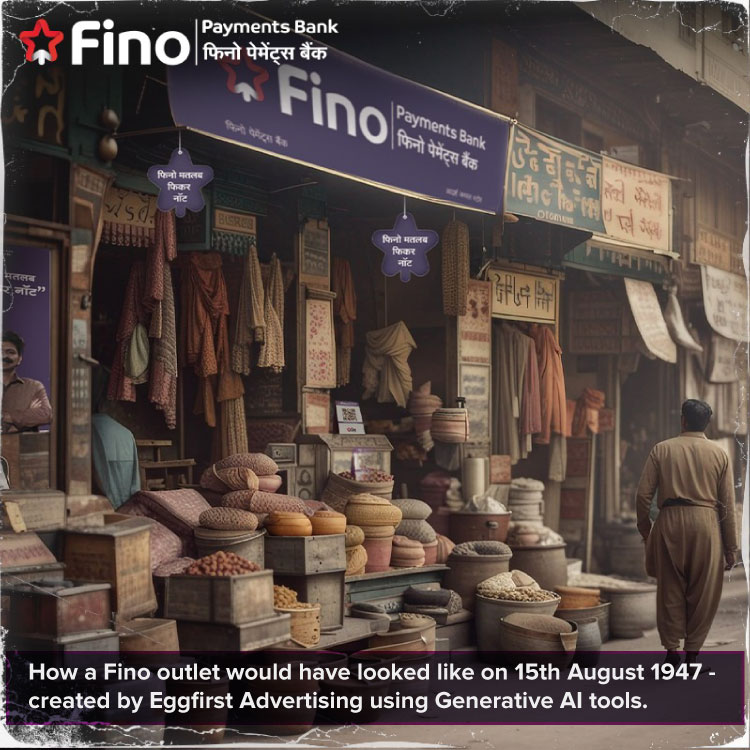

Anand: This is a very big area of work for us on 2 fronts. Generating content has become core to our marketing campaigns. In the last 4 months, 70-80% of the content that we have put out there is machine generated. We did a shoot for Independence Day showing how a Fino outlet would have looked on 15th August 1947. It generated a huge response in the market and the feedback was pretty good. On the practical side, for many of our campaigns, all the images that we’re using are AI generated. Increasingly, we are using AI to write some of the notifications that we send out to customers.

I see this usage growing significantly in the days ahead. It does pose certain ethical issues at times as to whose image it is and who the person is. The usage is driven by a combination of efficiency, quality of output and the ability to personalize. It gives you variety, and variety is the name of the game when you’re engaging consumers online – they need to see something new regularly, and that’s why marketers invest in content. As for ML, it’s not so new, but it allows you more flexibility for renewal modelling, cross-sell propensity, etc. ML is now paying dividends.

Ankit: I see 3 Vs that are very relevant – voice, video and vernacular. You can hyper-personalize across these 3 form factors in real time. Take a very simple use case. Say, somebody is chatting with the call center agent in Malayalam. The agent does not understand Malayalam, but the technology is translating in real time into English, with some summary as well. The agent responds in his own language, but the customer actually sees or hears the response in Malayalam. It’s not science fiction, it really is happening. At the same time, you can generate real-time videos which could be personalized and be really informative. I personally feel video is the thing. We are already seeing video on all social media channels and the younger generation is used to this. So video will be the game changer. Without technology, having a wide geographical distribution will be very, very costly.

Shallu: Generative AI has opened opportunities both internally and externally. There is a plethora of uses in customer experience, marketing, efficiency drives, data science models, etc. But the question to be addressed first is the RoI, followed by prioritization. In certain use cases, the RoI is not very obvious. The cost-to-output ratio at present is something to look at. Down the road, the cost may come down. What we have currently implemented in our organization is more on customer experience and efficiency. Just to share some numbers, our earlier cognitive bot was first-time-right in about 76% of its responses, which has risen to 98%.

From an efficiency perspective, we are in the lending business, so there are several processes and opportunities where we believe that cognitive plus generative AI put together can help us bring down the TAT for the customer and boost employee output.

Even for internal-facing applications, like in HR, we have so many employees asking so many questions. We would have, say, 1 HR resource for every 300 employees, and if that work is handled by a bot, it will be quite a game changer.

Another use case which we are testing is to do the first-level interview for entry-level candidates to our organization, where we have an avatar sitting in front who will ask relevant questions. Based on the answers, the avatar can keep changing the next question to be asked. This way, we believe we will be able to get better candidates and the next round will be much easier.

Atul: BFSI companies talk to millions of customers. But when you look at who becomes our customer, when you take the funnel to that level, you know you’re actually talking about 10X-100X as many people. There the conversations also go beyond predictability. A customer conversation is easier to predict – he may want to know the rate for something, or he may want a certificate. But when you look at prospect conversations, they could be completely unpredictable. Those conversations are more flexible and closer to human conversations. The customer may just be trying to identify what is good for him – which is not a predictable path on a decision tree. Where Gen AI comes in, and can become very powerful over a period of time, is that it can bring these unpredictable conversations into some kind of rhythm and then into a structure, which could potentially improve costs, efficiencies and reach.

Ashish: Leveraging AI & ML to give predictions and intelligence to salespeople while they are chatting is something that Gen AI can do. So instead of giving the intelligence to the management level and trying out long-term marketing campaigns, you can trickle down the whole impact to the frontline salesperson, which will give some ROI. You have to see how to make the intelligence trickle down to everyone, and if not, then limit it to certain levels. That is where you get the bang for the buck.

Hemant: For targeted marketing, our customers are already using several AI models to really focus on whom to target and how to target, and both are equally important. In banking and NBFCs, voice is predominant when it comes to campaigns, and the time and money that you spend, even at 11 paise per call, adds up to a ton of money. You need to look at when the delinquency will happen or when the customer will default, and so on. NBFCs depend heavily on external models because they rely on third-party scores, whereas big banks build their own scorecards based on their vast customer databases, which they then use for cross-sell and upsell. Your ability to train on your own data vis-à-vis training on external data – that’s another big area for our customers.

Manoj: How does the Databricks solution work for old organizations with lots of legacy data and new organizations with very little data?

Hemant: For us, it is about how well you are able to build models, how quickly you can build them, and how quickly you can deploy them. The strength of the platform comes from the ability to build, train and deploy. Size of the data really depends on the use case, not just the legacy. For example, a bank with 110 million customers who wants to build a rating score will look at 110 million times x records. But an NBFC with 3-5 years of data is dependent on external scores. What models will do is help you do underwriting, look at all other channels and at cross-app crawling, and get insights into buying patterns – and hence improve your ability to quickly build the next best option.

Vivek: We are implementing data pipelines, BI and analytics for our customers. The complex cases are 10% and the simple cases are 50%, with 40% being in between. It is very important to know how fast one can deliver the implementation. With generative AI, organizations will need fewer technical resources and more people who have the business acumen to do the implementation. A project that took 6 months earlier can be done in 3 months using generative AI.

Manoj: Which of your normal analytics projects or traditional AI projects are likely to transform into generative AI projects in 12 months? Will you start projects from scratch or convert existing ones?

Subrata: The projects that will transform the fastest will be those where predictive AI and generative AI complement each other. In any process, we have data assimilation, some prediction and some action which involves creation. Wherever this pattern manifests in the best possible way, those will turn the fastest. Two specific things are of special interest to me. One is cross-sell. Traditionally, the way we do cross-sell is always campaign-led, and we target customers with a pitch. We expect that the customer needs that product at the time when we are reaching out to him/her. But fundamentally, the best sale happens when the customer wants the product and at the time he feels like contacting us. So the process is different – it is a series of communications to the customer, which will range from a completely non-sales pitch to a soft nudge to a pitch. To really achieve this engagement in a hyper-personalized way, generative AI would give a fantastic push. The second point is that for all this to succeed, it requires upskilling and learning in various parts of the organization.

Shallu: I believe we will not go the whole hog as we are regulated entities. For personal data-led use cases, I still see them some time away because there are several regulations one has to keep in mind. I think it is more FAQ, content and marketing which will probably take off in the next few months.

Manoj: Top three challenges?

Sankaranarayanan: Testing is a challenge because the system will be completely broad based, and it can go anywhere. In external facing systems, the context can go anywhere, which is a huge challenge. Internal use cases are more focused and hence easier.

Subrata: The points of failure cannot be quantified in predictive value. In Gen AI, we will not know what comes up if it goes wrong. It is very hard to quantify. One of the ways to do this will be to see what is the implication of an error. The projects that will kick off in the early stages are where the implication of any error will be relatively low.

Shallu: To achieve the business objectives from a testing perspective, the right business person now needs to be put in place – the person who’s actually implementing the use case should know more about what is to be done. As for testing for hallucinations, a business person can envisage these to some extent and improve on that over a period of time.

Preetam: Currently the way we teach the model is we teach what’s right, what’s correct, what’s happening, what’s good. We have not thought of teaching the model what’s not right, what’s not good, what does not fit in the ecosystem. If we don’t start thinking on those lines, then over a period of time, hallucination will become a much bigger problem and create a lot of friction. Models don’t have beliefs, opinions, and emotions – they are pure binary.

Shallu: So, we need an ethical hacker kind of role.

Atul: In all customer transactions, usually money is involved. So, the accuracy level is paramount, especially when it is customer facing. The acceptance and the explosion of reach would really hinge on that. One needs to experiment before rolling out.

Anand: The built-in biases will start really creating a problem as we go forward. We will realize it as we go along. Certain companies may be ahead of the curve and may have already experienced it, but the biases will show up, because at the end of the day, there are several unknowns within these kinds of systems, and you don’t know who has triggered what. That is a challenge that one would see, whether it is in creative content or underwriting decision-making models. We in India will probably see a little more of it than in many other countries because of our variety, assumptions, heterogeneity, etc. For human beings, bias is not necessarily a negative, it can be a shortcut. So, biases or shortcuts may start playing out in manners that we may not have envisaged.

Manoj: Is there a technology solution to minimize these biases?

Pavan: I believe ethics plays a role here. Biases have always been there, whether we are building today or have built in the past. As far as banking is concerned, trust matters. If your output levels are not accurate, trust goes away. AI may bring efficiency, but only ethics will bring trust. So there has to be a balance between ethics and using Gen AI tools. How we implement ethics as we implement Gen AI would be a crucial factor.

Manoj: Can technology help identify the bias, fix the bias, reduce the bias?

Vikas: On top of the output, you can implement rule-based validation checks to minimize the impact of those biases.

Ashish: We are seeing clients putting more and more wrappers on top of the AI models, and then they’re doing it in 2 ways. One is to eliminate the wrong output and the other is to re-tune the model. So, every time they see a quick fluctuation within 15 days, they quickly tweak the rules on top of the model. For certain models, sometimes when we talk with clients, we say that you should do it with a wrapper, because the age-old models start throwing out poor results after some time.
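The wrapper idea that Vikas and Ashish describe can be sketched roughly as follows. This is a minimal illustration, not any panelist's actual implementation: `Rule`, `failed_rules`, `guarded_reply`, the three sample rules and the fallback message are all hypothetical names chosen for this sketch, and `generate` stands in for whatever model call an organization uses.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Rule:
    """One validation rule; `check` returns True when the text passes."""
    name: str
    check: Callable[[str], bool]


def failed_rules(text: str, rules: List[Rule]) -> List[str]:
    """Return the names of all rules that the text violates."""
    return [rule.name for rule in rules if not rule.check(text)]


def guarded_reply(
    generate: Callable[[str], str],
    prompt: str,
    rules: List[Rule],
    fallback: str,
) -> Tuple[str, List[str]]:
    """Call the model, then swap in a fallback if any rule fails."""
    draft = generate(prompt)
    failures = failed_rules(draft, rules)
    if failures:
        return fallback, failures
    return draft, []


# Hypothetical rules; a real deployment would maintain and re-tune its own list.
RULES = [
    Rule("not_empty", lambda t: bool(t.strip())),
    Rule("max_length", lambda t: len(t) <= 300),
    Rule("no_guarantees", lambda t: "guaranteed" not in t.lower()),
]

FALLBACK = "Let me connect you with a colleague who can help."
```

Because the rules live outside the model, they can be tweaked quickly when outputs drift, while re-tuning the model itself remains a separate, slower path. The list of failed rule names returned alongside the reply gives a simple signal for deciding when that re-tuning is due.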

Sankaranarayanan: Six months ago, we trained our AI model on English, Hindi, Telugu and Gujarati. It did great on those languages, but then people started talking in Hinglish and it was unable to understand. Also, dialects of the same language are different. So now we are training it in colloquial language. We have been working on it for 3 months and are awaiting a breakthrough.

Ashish: When we developed a conversational analytics platform, the second language after English that we selected was Hinglish. Next, we did Marathi – that’s how we did it.

Suyog: As a part of preparedness for using Gen AI, most organizations will also have to revisit their entire data strategy and data ecosystems, i.e., having the right architecture and doing the right catalog. Most of the thinking has gone into models and analytics, and less into data. There will be a requirement to look at data ability, to structure the data in a way that it can be used appropriately, while complying with the Personal Data Protection Act and GDPR. Much work needs to be done around data pipelines and data architecture to enable us to unleash the power of generative AI.

Manoj: What about governance and compliance challenges?

Suyog: For the securities industry, there are several regulatory considerations before we put out content in the marketplace. We cannot say that a particular stock will go up by x%. It does require a compliance filter or a human filter to work on top of the Gen AI models.
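One way to picture the compliance filter Suyog mentions is a simple pattern check that routes risky drafts to a human reviewer. The sketch below is an assumption-laden illustration: the regular expression, the phrases it looks for and the function name `needs_human_review` are hypothetical, and a real compliance filter would be defined by the firm's compliance team rather than by a single pattern.

```python
import re

# Hypothetical pattern: forward-looking price claims such as
# "will go up" or "15% returns". Real rules would be far broader.
PRICE_CLAIM = re.compile(
    r"\b(?:will|shall|is going to|guaranteed to)\s+"
    r"(?:go up|rise|gain|jump|fall|drop|double)\b"
    r"|\b\d+(?:\.\d+)?\s*%\s*(?:returns?|upside|gain)\b",
    re.IGNORECASE,
)


def needs_human_review(text: str) -> bool:
    """Flag generated content that appears to predict price movements."""
    return PRICE_CLAIM.search(text) is not None


# Example drafts, as a Gen AI model might produce them.
drafts = [
    "XYZ will go up by 12% next quarter.",
    "Our research desk publishes a weekly market summary.",
]
flagged = [d for d in drafts if needs_human_review(d)]
```

A pattern check like this only catches the obvious cases, which is why the human filter remains on top of it.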

Shallu: Equally important as governance and compliance is the brand. That has a big impact, maybe bigger than governance and compliance, because it’s about your company’s image. I think that’s another challenge which has to be addressed, because an incorrect answer can blow up into a big brand issue for the organization.

Virendra: Since a lot of alternate data is being used to create models, it is important to have the right consent from the customer and to capture only the data that will actually be used in the future. Earlier, the thought was that whatever data comes, let’s capture it, and later we will think about what to do with it. With the data protection bill and other regulations, we should capture only those data points which are helpful.

Hemant: At Databricks, we saw the need for governance very early on and we built a phenomenal governance layer on top of both data engineering and data science. That offering is called Unity Catalog. Please check that out if you have governance-related needs. We address data catalog, data management, data lineage, data consumption, user rights, and much more. Traditionally, there has been a lot of governance built on the data engineering side. So, how do you bring the same lineage to the data science side, especially when you have AI models? Even when the data leaves your organization to some partner, how do you ensure that you have the lineage sitting on top of it? That is what we do. We introduced this less than 2 years ago and have more than 5000 customers today for this.

Manoj: Do you also have some kind of technology for the wrapper layer?

Hemant: Absolutely. Governance is the single biggest driving factor for all organizations going forward. If you look at the last five years, all of the actions taken by RBI or IRDAI or any regulator in the country have to do with governance. And hence we firmly believe that governance is front and center when you’re building your data architecture. It is really the foremost thing that every organization needs to account for when building a new architecture.

Manoj: What impediments do you see in starting new use cases and in scaling up? What kind of questions are CFOs asking?

Shallu: The gap between opportunity and reality is very wide right now. For many opportunities, when you look at the costing and RoI, eyebrows are being raised. I think the only way out right now is prioritization. Over a period of time, the cost has to come down.

Ankit: If you want to do it for scale, the costing will be taken care of. According to me there is no negative ROI. If scaling can be done, ROI will be resolved.

Suyog: As practitioners, we have to be razor sharp with our use cases and hence business translation is paramount. Everybody talks about data skills, math skills, etc., and we should also include business translation skills. When the CFO asks whether you want to buy or build, my answer is that you buy components to build solutions. And that’s been one of the pulls for me to attend this session. The infrastructure also has to take into consideration the new regulations that are coming up. For example, if a client wants it, you should be in a position to delete all his data in your system. It’s not just my organization being able to do it; it also applies to my partners and third-party providers who are helping me open my accounts and do transactions. All of this will require lots of help from the ecosystem members and partners.

Hemant: On the subject of scale, we were working with one of the largest banks. Earlier, they were taking about 11 weeks to build a model. Today they are deploying 2-3 models a week. On buy vs build, we are a platform, where our customers are really able to leverage all aspects of the platform. This will become a big differentiator. On ROI, many CDOs, CTOs, CIOs are clearly saying what they will do and what they won’t do. Much of that comes out of experimentation and learning. Their conversation has completely changed from cost to value.

Vivek: We have done 2-3 implementations on Gen AI and I will echo what Hemant has said: customers are more concerned about value than cost.

Manoj: BFSI has been slow to start using public cloud. Will Gen AI change that?

Shallu: BFSI companies will move to cloud only if there are big use cases coming their way.

Pavan: We are a 6-year-old organization and we are completely on cloud; we started on cloud. I see more and more organizations coming up on cloud, especially fintechs and new-age NBFCs. Why – because the time to implementation is very short. Overall, we require less manpower to manage the entire stack. Cloud has its own benefits, and I don’t think AI has an influence on those decisions.

Manoj: Do you already have technologies that will help you start your Generative AI journey? What technologies do you think are essential to start?

Suyog: What is crucial is the ability to have the right data pipeline and data ecosystem in place. Many organizations have the data ecosystem required for classical statistical modelling or supervised machine learning. But for adopting Gen AI to its fullest capabilities, the data strategy and the data pipeline would have to undergo many changes, and that is where the real leverage of technology will happen. The maths part of it is equally important but it is more manageable. Institutions like Databricks have a large role to play in making practitioners capable of unleashing the power of the data and also meeting the requirements of regulators across SEBI, RBI, IRDAI, or the upcoming Data Protection Authority.

Manoj: Does the Databricks platform focus only on new-age applications, or are you able to support core banking and similar legacy applications as well?

Hemant: Our platform addresses data warehousing needs and data lake needs. We are the pioneer of the term lakehouse, which means it’s a single source of truth that you can use for the data engineering side, i.e. static data coming in from your traditional sources like core banking, core insurance, ERP, etc., and marry that with the voice, video, unstructured and semi-structured data which is getting created today. A large amount of the data being generated today is actually from unstructured and semi-structured sources. So the ability to integrate that is what differentiates us as Databricks. There is governance on top of it and a whole host of services that we offer.

Manoj: How much of the work that Exponentia does involves data management and similar work?

Vivek: We are leveraging Databricks as a platform there as well. We are implementing Mosaic ML as one of the Gen AI components, which is an LLM based platform. In one of the recent use cases, the client has a large number of products and new products keep coming, so we had to enable the sales team as part of revenue maximization.

Manoj: Thank you all for an insightful discussion. Thank you very much.